Setting Up a Kubernetes Cluster with 3 Servers: A Complete Guide

Introduction

Kubernetes (K8s) is a container orchestration platform that automates the deployment, scaling, and management of containerized applications. In this guide, we'll walk through setting up a Kubernetes cluster with kubeadm on three servers: one master node and two worker nodes. The result is a working cluster suitable for learning and testing. Because it has a single control-plane node, it is not highly available.

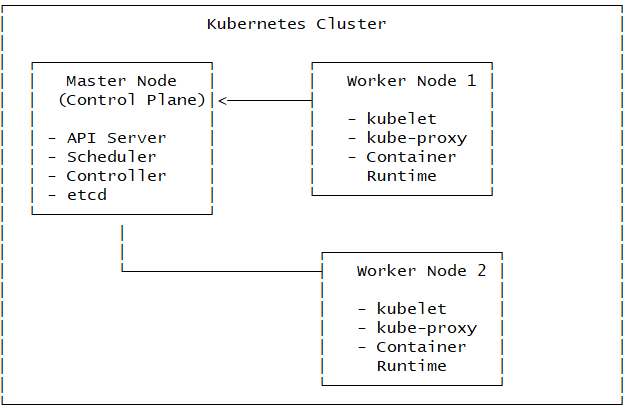

Architecture Overview

Our cluster will consist of:

- 1 Master Node (Control Plane): Runs the control-plane components that manage the cluster (API server, scheduler, controller manager, and the etcd database)

- 2 Worker Nodes: Run application workloads and pods

Prerequisites

System Requirements

- Operating System: Ubuntu 20.04 LTS or later (this guide uses Ubuntu)

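- Hardware:

  - Master Node: 2 CPU cores, 2GB RAM, 20GB storage (kubeadm's preflight checks fail on the master with fewer than 2 CPUs)

  - Worker Nodes: 1 CPU core, 1GB RAM, 20GB storage (a bare minimum; the kubeadm documentation recommends at least 2GB RAM per machine)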

- Network: All nodes must be able to reach each other. In particular, the workers must reach the master on TCP port 6443 (the Kubernetes API server).

- Root Access: sudo privileges on all nodes

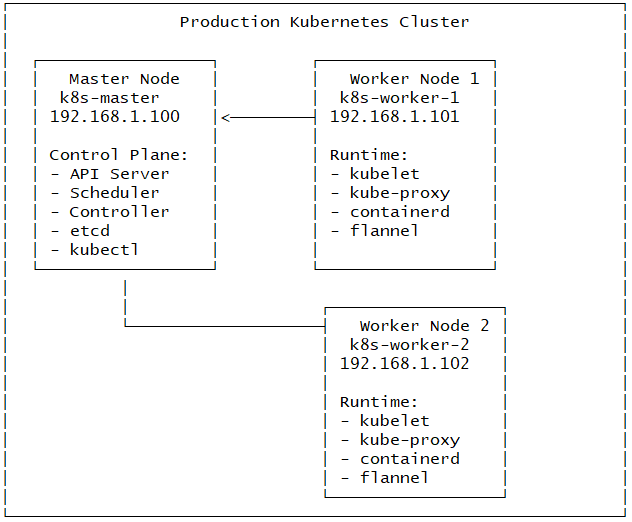

Server Information

For this guide, we'll use these example servers:

- Master Node: k8s-master (IP: 192.168.1.100)

- Worker Node 1: k8s-worker-1 (IP: 192.168.1.101)

- Worker Node 2: k8s-worker-2 (IP: 192.168.1.102)

Step 1: Initial Server Setup

1.1 Update System Packages

Run on all three servers:

# Update package lists and upgrade installed packages

sudo apt update && sudo apt upgrade -y

# Install required packages

sudo apt install -y apt-transport-https ca-certificates curl software-properties-common

1.2 Configure Hostnames

Give each server a unique hostname:

# On master node

sudo hostnamectl set-hostname k8s-master

# On worker node 1

sudo hostnamectl set-hostname k8s-worker-1

# On worker node 2

sudo hostnamectl set-hostname k8s-worker-2

1.3 Update /etc/hosts File

Add entries on all nodes:

sudo nano /etc/hosts

Add these lines:

192.168.1.100 k8s-master
192.168.1.101 k8s-worker-1
192.168.1.102 k8s-worker-2
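Editing /etc/hosts by hand on three machines is easy to get wrong. Appending the same lines twice also leaves duplicates. Below is a minimal sketch that assumes a POSIX shell. `has_host`, `add_host_entry`, and `add_cluster_hosts` are hypothetical helper names. The file path is passed in as an argument, so you can try the functions on a scratch copy before touching /etc/hosts.

```shell
# has_host FILE NAME  (hypothetical helper)
# Succeeds if NAME appears as a hostname field on a non-comment line of FILE.
has_host() {
    awk -v n="$2" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }
    ' "$1" 2>/dev/null
}

# add_host_entry FILE IP NAME  (hypothetical helper)
# Appends "IP NAME" to FILE unless NAME is already listed, so re-runs are safe.
add_host_entry() {
    if has_host "$1" "$3"; then
        echo "skip: $3 already listed in $1"
    else
        printf '%s %s\n' "$2" "$3" >> "$1"
        echo "added: $2 $3"
    fi
}

# add_cluster_hosts FILE  (hypothetical helper)
# Adds the three example nodes used in this guide to FILE.
add_cluster_hosts() {
    for entry in "192.168.1.100 k8s-master" \
                 "192.168.1.101 k8s-worker-1" \
                 "192.168.1.102 k8s-worker-2"; do
        # $entry is deliberately unquoted so it splits into IP and hostname
        add_host_entry "$1" $entry
    done
}
```

To apply it for real, source the functions in a root shell (for example `sudo -i`) on each node and run `add_cluster_hosts /etc/hosts`. The match is on whole hostname fields rather than substrings, so an existing `k8s-worker-10` entry cannot hide a missing `k8s-worker-1`.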

1.4 Disable Swap

By default, the kubelet refuses to start while swap is enabled, so disable it:

# Disable swap temporarily

sudo swapoff -a

# Disable swap permanently

sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
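The sed expression above only matches lines where `swap` has a space on each side. If your /etc/fstab separates fields with tabs, the swap entry stays active and swap comes back after a reboot. The sketch below is an alternative under that assumption. The hypothetical `comment_swap_entries` helper comments out any active entry whose third field (the filesystem type) is `swap`, whatever whitespace separates the fields. It prints the result instead of editing the file in place.

```shell
# comment_swap_entries FILE  (hypothetical helper)
# Prints FILE with every active swap entry (third field == "swap") commented out.
# Lines that are already comments are left alone, and original spacing is kept.
comment_swap_entries() {
    awk '$1 !~ /^#/ && $3 == "swap" { $0 = "#" $0 } { print }' "$1"
}

# Usage (review the diff before replacing the real file):
#   comment_swap_entries /etc/fstab > /tmp/fstab.new
#   diff /etc/fstab /tmp/fstab.new
#   sudo cp /etc/fstab /etc/fstab.bak && sudo cp /tmp/fstab.new /etc/fstab
```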

Step 2: Install Container Runtime (containerd)

2.1 Install containerd

Run on all nodes:

# Add Docker's official GPG key

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

# Add Docker repository

echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

# Update package lists

sudo apt update

# Install containerd

sudo apt install -y containerd.io

2.2 Configure containerd

# Create containerd configuration directory

sudo mkdir -p /etc/containerd

# Generate default configuration

containerd config default | sudo tee /etc/containerd/config.toml

# Enable SystemdCgroup

sudo sed -i 's/SystemdCgroup \= false/SystemdCgroup \= true/g' /etc/containerd/config.toml

# Restart and enable containerd

sudo systemctl restart containerd

sudo systemctl enable containerd
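`sed -i` exits successfully even when its pattern matches nothing. A typo, or a default config.toml that a different containerd version formats differently, would therefore leave the cgroup driver unchanged without any error. A short check confirms the setting before you move on. It is sketched here with a hypothetical `check_systemd_cgroup` helper.

```shell
# check_systemd_cgroup FILE  (hypothetical helper)
# Succeeds only if FILE sets SystemdCgroup = true and never sets it to false.
check_systemd_cgroup() {
    grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$1" &&
        ! grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*false' "$1"
}

# Usage on each node:
#   check_systemd_cgroup /etc/containerd/config.toml && echo "SystemdCgroup is enabled"
```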

Step 3: Install Kubernetes Components

3.1 Add Kubernetes Repository

Run on all nodes:

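# Create the keyring directory (it does not exist by default on releases older than Ubuntu 22.04)

sudo mkdir -p -m 755 /etc/apt/keyrings

# Add Kubernetes signing key

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.28/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg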

# Add Kubernetes repository

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.28/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

3.2 Install Kubernetes Tools

# Update package lists

sudo apt update

# Install kubelet, kubeadm, and kubectl

sudo apt install -y kubelet kubeadm kubectl

# Hold packages to prevent automatic updates

sudo apt-mark hold kubelet kubeadm kubectl

# Enable kubelet service

sudo systemctl enable kubelet
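`apt-mark showhold` lists the packages currently on hold. The sketch below turns that list into a check that prints any Kubernetes package that is not held. Empty output means all three are pinned. `missing_holds` is a hypothetical helper name.

```shell
# missing_holds PKG...  (hypothetical helper)
# Reads package names on stdin (one per line, as printed by `apt-mark showhold`)
# and prints every PKG argument that does not appear in that list.
missing_holds() {
    held=$(cat)
    for pkg in "$@"; do
        printf '%s\n' "$held" | grep -qx -- "$pkg" || echo "$pkg"
    done
}

# Usage on each node:
#   apt-mark showhold | missing_holds kubelet kubeadm kubectl
```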

Step 4: Configure Kernel Parameters

4.1 Load Kernel Modules and Enable IP Forwarding

Run on all nodes:

# Load required kernel modules

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

# Configure sysctl parameters

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

# Apply sysctl parameters

sudo sysctl --system
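To confirm the settings are live, and not just written to /etc/sysctl.d, you can read them back from /proc/sys. Below is a sketch with `check_k8s_sysctls` as a hypothetical helper. The two `net/bridge` entries only exist once the br_netfilter module is loaded. A `missing` value therefore usually means the `modprobe br_netfilter` step did not run.

```shell
# check_k8s_sysctls [DIR]  (hypothetical helper)
# Prints each required kernel parameter with its current value and fails if any
# is not 1. DIR defaults to /proc/sys; it is a parameter only so the function
# can be tried against a fake directory tree.
check_k8s_sysctls() {
    dir=${1:-/proc/sys}
    rc=0
    for key in net/bridge/bridge-nf-call-iptables \
               net/bridge/bridge-nf-call-ip6tables \
               net/ipv4/ip_forward; do
        val=$(cat "$dir/$key" 2>/dev/null || echo missing)
        echo "$key = $val"
        [ "$val" = 1 ] || rc=1
    done
    return $rc
}

# Usage on each node (no root needed):
#   check_k8s_sysctls
```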

Step 5: Initialize Kubernetes Master Node

5.1 Initialize the Cluster

Run only on the master node:

# Initialize the Kubernetes cluster (10.244.0.0/16 matches the default pod network in the Flannel manifest used in Step 6)

sudo kubeadm init --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=192.168.1.100

# The output will show a join command like:

# kubeadm join 192.168.1.100:6443 --token <token> --discovery-token-ca-cert-hash sha256:<hash>

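Important: Save the join command from the output; you'll need it for the worker nodes. The token it contains expires after 24 hours by default (see 9.2 for how to generate a new one).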
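The same two settings can instead live in a kubeadm configuration file. A file is easier to review and keep under version control than command-line flags. This is a sketch assuming the `kubeadm.k8s.io/v1beta3` configuration API used by kubeadm v1.28. The file name kubeadm-config.yaml is arbitrary.

```shell
# Write a kubeadm config equivalent to the --apiserver-advertise-address and
# --pod-network-cidr flags used above
cat <<'EOF' > kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.1.100
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
networking:
  podSubnet: 10.244.0.0/16
EOF

# Then, on the master node, instead of the flag-based command:
#   sudo kubeadm init --config kubeadm-config.yaml
```

kubeadm does not accept `--config` together with flags such as `--pod-network-cidr`, so use one form or the other.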

5.2 Configure kubectl for Regular User

# Create .kube directory

mkdir -p $HOME/.kube

# Copy admin configuration

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# Change ownership

sudo chown $(id -u):$(id -g) $HOME/.kube/config

5.3 Verify Master Node Status

# Check node status (the master reports NotReady until the pod network add-on is installed in Step 6)

kubectl get nodes

# Check system pods

kubectl get pods -n kube-system

Step 6: Install Pod Network Add-on (Flannel)

6.1 Deploy Flannel Network

Run on master node:

# Apply Flannel manifest

kubectl apply -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

# Check the Flannel pods and re-run until they are all Running (the manifest deploys them into the kube-flannel namespace)

kubectl get pods -n kube-flannel

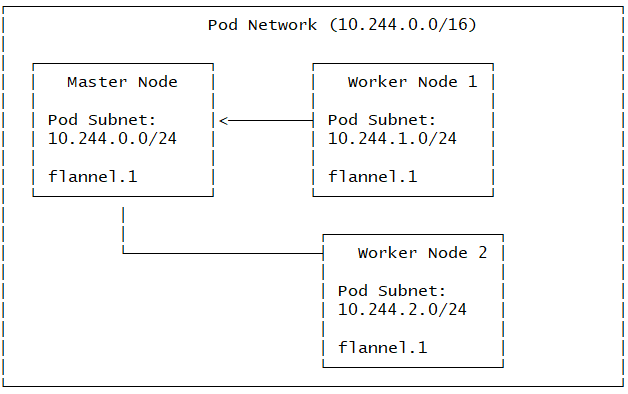

Network Architecture After Flannel Installation:

Step 7: Join Worker Nodes to Cluster

7.1 Join Worker Nodes

Run on both worker nodes using the join command from Step 5.1:

# Replace with your actual join command from master initialization

sudo kubeadm join 192.168.1.100:6443 --token <your-token> --discovery-token-ca-cert-hash sha256:<your-hash>

7.2 Verify Cluster Status

Run on master node:

# Check all nodes

kubectl get nodes

# Expected output:

# NAME           STATUS   ROLES           AGE   VERSION
# k8s-master     Ready    control-plane   10m   v1.28.x
# k8s-worker-1   Ready    <none>          5m    v1.28.x
# k8s-worker-2   Ready    <none>          5m    v1.28.x

# Check cluster info

kubectl cluster-info

# Check system components

kubectl get pods -n kube-system
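When scripting the setup, you may want to check readiness without reading the table by eye. Below is a minimal sketch that assumes the default `kubectl get nodes` column layout, with STATUS in the second column. `all_nodes_ready` is a hypothetical helper. It fails if any node is not Ready or if the node count differs from what you expect.

```shell
# all_nodes_ready [EXPECTED_COUNT]  (hypothetical helper)
# Reads `kubectl get nodes --no-headers` output on stdin. Fails if any node's
# STATUS is not exactly "Ready" (so a cordoned "Ready,SchedulingDisabled" node
# also fails), or if the node count differs from EXPECTED_COUNT (default 3).
all_nodes_ready() {
    awk -v want="${1:-3}" '
        BEGIN { bad = 0 }
        $2 != "Ready" { print "not ready: " $1 " (" $2 ")"; bad = 1 }
        END {
            if (NR != want) { print "expected " want " nodes, found " NR; bad = 1 }
            exit bad
        }'
}

# Usage on the master node:
#   kubectl get nodes --no-headers | all_nodes_ready 3 && echo "cluster ready"
```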

Step 8: Test Your Cluster

8.1 Deploy a Test Application

# Create a simple nginx deployment

kubectl create deployment nginx-test --image=nginx

# Expose the deployment

kubectl expose deployment nginx-test --port=80 --type=NodePort

# Check the service

kubectl get services

# Get detailed service info

kubectl describe service nginx-test
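With the default externalTrafficPolicy of Cluster, a NodePort service is reachable on its allocated port at the IP address of every node. This includes nodes that run no nginx pod. The sketch below looks up the port with a jsonpath query and builds one URL per node. `node_urls` is a hypothetical helper. The range check assumes the default NodePort range of 30000-32767.

```shell
# node_urls PORT IP...  (hypothetical helper)
# Prints one http://IP:PORT URL per node, refusing ports outside the default
# NodePort range (30000-32767).
node_urls() {
    port=$1; shift
    case $port in
        ''|*[!0-9]*) echo "invalid port: $port" >&2; return 1 ;;
    esac
    if [ "$port" -lt 30000 ] || [ "$port" -gt 32767 ]; then
        echo "port $port is outside the default NodePort range" >&2
        return 1
    fi
    for ip in "$@"; do
        echo "http://$ip:$port"
    done
}

# Usage on the master node (requires curl):
#   port=$(kubectl get service nginx-test -o jsonpath='{.spec.ports[0].nodePort}')
#   for url in $(node_urls "$port" 192.168.1.100 192.168.1.101 192.168.1.102); do
#       curl -s -o /dev/null -w "$url -> %{http_code}\n" "$url"
#   done
```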

8.2 Verify Pod Distribution

# Check where pods are running

kubectl get pods -o wide

# Scale the deployment to see distribution

kubectl scale deployment nginx-test --replicas=3

# Check distribution again

kubectl get pods -o wide
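To summarize the distribution instead of scanning the wide output, you can ask kubectl for only the node name of each pod and count the results. This sketch assumes the `app=nginx-test` label that `kubectl create deployment` adds to the pods. `pods_per_node` is a hypothetical helper. Using `custom-columns` avoids splitting the `-o wide` table on whitespace. That splitting breaks in recent kubectl releases, where the RESTARTS column can contain values such as `1 (5m ago)`.

```shell
# pods_per_node  (hypothetical helper)
# Reads one node name per line on stdin and prints "COUNT NODE" pairs, busiest
# node first. Pending pods show up under "<none>".
pods_per_node() {
    sort | uniq -c | sort -rn | awk '{ print $1, $2 }'
}

# Usage on the master node:
#   kubectl get pods -l app=nginx-test --no-headers \
#       -o custom-columns=NODE:.spec.nodeName | pods_per_node
```

Expect the pods to appear only on the two workers. kubeadm taints the master with `node-role.kubernetes.io/control-plane:NoSchedule`, so ordinary pods are not scheduled there.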

Step 9: Cluster Management Commands

9.1 Useful kubectl Commands

# Get cluster information

kubectl cluster-info

# View all resources

kubectl get all

# Check node details

kubectl describe nodes

# Monitor resources

kubectl top nodes # Requires metrics-server

# View logs

kubectl logs <pod-name>

# Open a shell in a pod (use /bin/sh if the image has no bash)

kubectl exec -it <pod-name> -- /bin/bash

9.2 Troubleshooting Commands

# Check kubelet logs

sudo journalctl -u kubelet

# Check containerd logs

sudo journalctl -u containerd

# Reset a node (if needed)

sudo kubeadm reset

# Generate a new join command if the original token has expired (run on the master)

sudo kubeadm token create --print-join-command
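The kubelet writes its logs in klog format. Each entry starts with a severity letter (I, W, E, or F) followed by the date, for example `E0612 10:15:33.000001`. Below is a sketch of a filter that keeps only error and fatal entries. `klog_errors` is a hypothetical helper, and the pattern assumes that standard klog prefix.

```shell
# klog_errors  (hypothetical helper)
# Keeps only klog error (E) and fatal (F) lines from stdin. A klog prefix is
# the severity letter, four digits for month and day, then the time.
klog_errors() {
    grep -E '(^|[[:space:]])[EF][0-9]{4} [0-9]{2}:[0-9]{2}:[0-9]{2}'
}

# Usage:
#   sudo journalctl -u kubelet --since "1 hour ago" --no-pager | klog_errors
```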

Final Cluster Architecture

Step 10: Security Considerations

10.1 Basic Security Setup

# Create a service account for applications

kubectl create serviceaccount app-service-account

# Create a cluster role that can only read pods (get, list, watch)

kubectl create clusterrole app-role --verb=get,list,watch --resource=pods

# Bind role to service account

kubectl create clusterrolebinding app-binding --clusterrole=app-role --serviceaccount=default:app-service-account
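The commands above grant read access to pods in every namespace, because they use a ClusterRole and a ClusterRoleBinding. If the application only needs its own namespace, a Role and a RoleBinding give narrower access. Below is a sketch that writes such a manifest, assuming the application runs in the default namespace. The names app-pod-reader and app-pod-reader-binding are hypothetical.

```shell
# Namespaced alternative: read-only access to pods in "default" only
cat <<'EOF' > app-rbac.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: app-pod-reader
  namespace: default
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: app-pod-reader-binding
  namespace: default
subjects:
- kind: ServiceAccount
  name: app-service-account
  namespace: default
roleRef:
  kind: Role
  name: app-pod-reader
  apiGroup: rbac.authorization.k8s.io
EOF

# Apply and check on the master node:
#   kubectl apply -f app-rbac.yaml
#   kubectl auth can-i list pods --as=system:serviceaccount:default:app-service-account
#   kubectl auth can-i list pods -n kube-system --as=system:serviceaccount:default:app-service-account
```

The second `can-i` check should answer "no" only after you remove the cluster-wide binding (`kubectl delete clusterrolebinding app-binding`). Otherwise that binding still grants access everywhere.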

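10.2 Network Policies

Flannel does not enforce NetworkPolicy objects. The API server accepts the policy below, but it has no effect unless the cluster runs a CNI plugin that implements network policies, such as Calico or Canal (Flannel networking combined with Calico's policy engine).

# Example policy that denies all ingress and egress traffic for every pod in the current namespace (save as network-policy.yaml)

cat <<EOF > network-policy.yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-all
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  - Egress
EOF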

# Apply network policy

kubectl apply -f network-policy.yaml
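A default-deny policy that includes Egress also blocks DNS lookups, so pods in the namespace can no longer resolve service names. A common companion is a policy that allows DNS traffic to the cluster DNS pods only. This sketch assumes the CoreDNS pods carry the `k8s-app=kube-dns` label, as they do in kubeadm clusters. It also relies on the `kubernetes.io/metadata.name` label that Kubernetes sets on every namespace. Like the deny-all policy, it is only enforced by a CNI plugin that implements NetworkPolicy.

```shell
# Allow pods in the default namespace to reach cluster DNS on port 53 only
cat <<'EOF' > allow-dns-egress.yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-dns-egress
  namespace: default
spec:
  podSelector: {}
  policyTypes:
  - Egress
  egress:
  - to:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: kube-system
      podSelector:
        matchLabels:
          k8s-app: kube-dns
    ports:
    - protocol: UDP
      port: 53
    - protocol: TCP
      port: 53
EOF

# Apply on the master node:
#   kubectl apply -f allow-dns-egress.yaml
```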

Step 11: Monitoring and Maintenance

11.1 Install Metrics Server

# Install metrics server for resource monitoring

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

# Check metrics server

kubectl get pods -n kube-system | grep metrics-server

# View resource usage

kubectl top nodes

kubectl top pods

11.2 Backup etcd

# Create a directory for the backups

sudo mkdir -p /backup

# Back up etcd data (kubeadm does not install etcdctl on the host; on Ubuntu, the etcd-client package provides it)

sudo ETCDCTL_API=3 etcdctl snapshot save /backup/etcd-backup-$(date +%Y%m%d-%H%M%S).db \
  --endpoints=https://127.0.0.1:2379 \
  --cacert=/etc/kubernetes/pki/etcd/ca.crt \
  --cert=/etc/kubernetes/pki/etcd/server.crt \
  --key=/etc/kubernetes/pki/etcd/server.key
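For regular backups, you will likely want the snapshot command in a script together with a retention rule, so that /backup does not fill the disk. Below is a sketch that assumes root on the master and etcdctl on the PATH. backup_etcd, prune_backups, BACKUP_DIR, and KEEP_DAYS are hypothetical names, and the seven-day default is an arbitrary choice.

```shell
# Hypothetical settings; adjust to your environment
BACKUP_DIR=${BACKUP_DIR:-/backup}
KEEP_DAYS=${KEEP_DAYS:-7}

# backup_etcd: take a snapshot with the same flags as above (run as root on the master)
backup_etcd() {
    snap="$BACKUP_DIR/etcd-backup-$(date +%Y%m%d-%H%M%S).db"
    ETCDCTL_API=3 etcdctl snapshot save "$snap" \
        --endpoints=https://127.0.0.1:2379 \
        --cacert=/etc/kubernetes/pki/etcd/ca.crt \
        --cert=/etc/kubernetes/pki/etcd/server.crt \
        --key=/etc/kubernetes/pki/etcd/server.key
}

# prune_backups DIR DAYS: delete etcd-backup-*.db files in DIR modified more than
# DAYS days ago (find's -mtime rounding applies); other files are left alone
prune_backups() {
    find "$1" -maxdepth 1 -type f -name 'etcd-backup-*.db' -mtime +"$2" -print -delete
}

# Example run, e.g. from root's crontab:
#   backup_etcd && prune_backups "$BACKUP_DIR" "$KEEP_DAYS"
# Inspect a snapshot:
#   ETCDCTL_API=3 etcdctl snapshot status <file> --write-out=table
```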
